Fabric Workspace Storage Analysis

Challenge: How can I see the underlying storage and files used by Fabric items such as Lakehouses and Warehouses, and check whether files still exist after I delete data from tables?

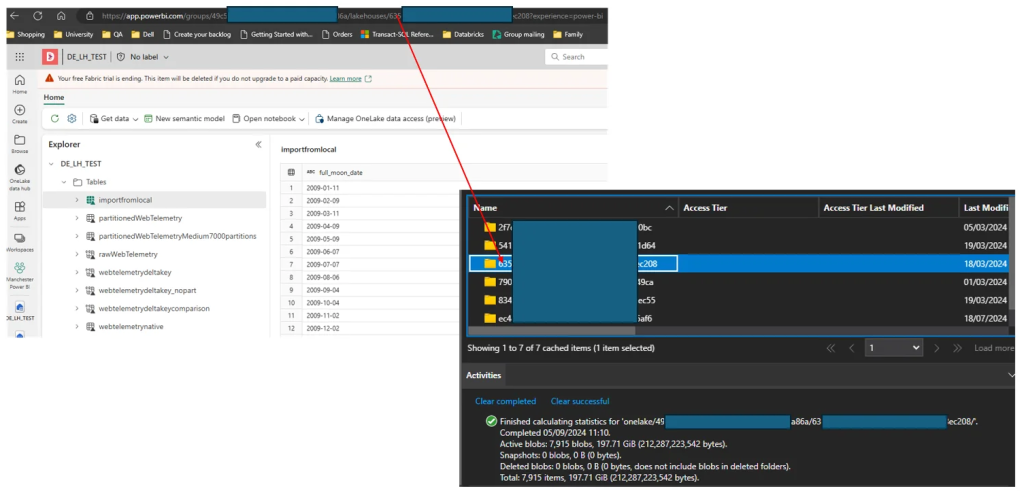

My Solution: I use Azure Storage Explorer to connect to a workspace and check what is using space.

Integrate OneLake with Azure Storage Explorer – Microsoft Fabric | Microsoft Learn

Open Azure Storage Explorer, click the Connect (plug) icon and choose “ADLS Gen2…”. For the directory URL, I point it at the workspace using the workspace GUID, which appears in the workspace URL in Power BI.

For example, if my workspace URL is https://app.powerbi.com/groups/00712662-3b84-40c2-ad7c-fb397ab8ea89, the directory URL I enter in Azure Storage Explorer is:

https://onelake.dfs.fabric.microsoft.com/00712662-3b84-40c2-ad7c-fb397ab8ea89
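The mapping is mechanical: take the GUID that follows /groups/ and append it to the OneLake DFS endpoint. Below is a minimal Python sketch, assuming workspace URLs always have the form https://app.powerbi.com/groups/<GUID>/...; the function name onelake_url_from_workspace_url is hypothetical, not part of any Fabric SDK.

```python
import uuid
from urllib.parse import urlparse

ONELAKE_DFS_ENDPOINT = "https://onelake.dfs.fabric.microsoft.com"


def onelake_url_from_workspace_url(workspace_url: str) -> str:
    """Build the OneLake ADLS Gen2 directory URL from a Power BI/Fabric workspace URL.

    Assumes the workspace URL path looks like /groups/<workspace GUID>/...
    """
    parts = urlparse(workspace_url).path.strip("/").split("/")
    # The workspace GUID is the path segment immediately after "groups"
    try:
        workspace_id = parts[parts.index("groups") + 1]
    except (ValueError, IndexError):
        raise ValueError(f"No /groups/<id> segment in {workspace_url!r}")
    # Validate the GUID so a typo fails here rather than in Storage Explorer
    uuid.UUID(workspace_id)
    return f"{ONELAKE_DFS_ENDPOINT}/{workspace_id}"
```

Trailing path segments and query strings (for example a `/list?experience=...` suffix) are ignored, so you can paste the URL straight from the browser address bar.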

Then right-click a folder and choose “Selection Statistics” to see its size. For example, I can drill down into a lakehouse, open the Tables folder, and select a table to see its size. You can also drill into a table’s folder to check whether parquet files are still there.
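If you would rather script the check than click through Storage Explorer, a rough equivalent of Selection Statistics is to walk the folder and total the file sizes. This sketch assumes the table folder is reachable as a local path, for example through the default-lakehouse mount in a Fabric notebook (assumed here to be /lakehouse/default/Tables/<table>); FolderStats and folder_stats are hypothetical helpers. It counts parquet data files separately from the _delta_log folder so you can see whether data files are still present.

```python
import os
from dataclasses import dataclass


@dataclass
class FolderStats:
    data_files: int = 0   # parquet files outside _delta_log (table data)
    data_bytes: int = 0
    other_files: int = 0  # everything else: log commits, checkpoints, CRCs, ...
    other_bytes: int = 0

    @property
    def total_bytes(self) -> int:
        return self.data_bytes + self.other_bytes


def folder_stats(root: str) -> FolderStats:
    """Recursively count files and bytes under root, similar to Selection Statistics."""
    stats = FolderStats()
    for dirpath, _dirnames, filenames in os.walk(root):
        # Delta keeps its transaction log (JSON commits and checkpoint parquet) in _delta_log
        in_log = "_delta_log" in os.path.relpath(dirpath, root).split(os.sep)
        for name in filenames:
            size = os.path.getsize(os.path.join(dirpath, name))
            if name.endswith(".parquet") and not in_log:
                stats.data_files += 1
                stats.data_bytes += size
            else:
                stats.other_files += 1
                stats.other_bytes += size
    return stats
```

For example, `folder_stats("/lakehouse/default/Tables/webtelemetrydeltakey_nopart").data_bytes / 1024**3` would give the parquet total in GiB, under the mount-path assumption above.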

(This blog post also shows how to get sizes using PowerShell: Getting the size of OneLake data items or folders | Microsoft Fabric Blog | Microsoft Fabric.)

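For the webtelemetrydeltakey_nopart table above, the parquet files total 88 GB in the Tables folder. If I run a DELETE that removes every row from that table, the folder is still 88 GB, even after I run a VACUUM command on the table. This is because a Delta DELETE does not physically delete parquet files; it records them as removed in the table’s _delta_log, and VACUUM only deletes removed files that are older than the retention period.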
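You can see this in the transaction log itself. Each commit in _delta_log is a newline-delimited JSON file of actions, and a DELETE writes a `remove` action (with a `deletionTimestamp` in epoch milliseconds) for each file it drops. The Python sketch below replays those commits to split files into ones the current version still references and ones that are only tombstoned. It is a simplification under stated assumptions: it reads only the numbered JSON commits and ignores checkpoints, so it is incomplete if older commits have already been cleaned up; referenced_and_removed is a hypothetical helper.

```python
import json
import os


def referenced_and_removed(table_path: str):
    """Replay the JSON commits in <table>/_delta_log.

    Returns (live, removed):
      live    -> {path: size} for files the latest version still references
      removed -> {path: deletionTimestamp} for files marked as removed
    Simplification: assumes every commit since table creation is still present
    as a JSON file (checkpoints are ignored).
    """
    log_dir = os.path.join(table_path, "_delta_log")
    # Commit files are zero-padded version numbers, so name order is commit order
    commits = sorted(
        f for f in os.listdir(log_dir) if f.endswith(".json") and f[:-5].isdigit()
    )
    live, removed = {}, {}
    for commit in commits:
        with open(os.path.join(log_dir, commit)) as fh:
            for line in fh:
                if not line.strip():
                    continue
                action = json.loads(line)
                if "add" in action:
                    add = action["add"]
                    live[add["path"]] = add.get("size", 0)
                    removed.pop(add["path"], None)
                elif "remove" in action:
                    rm = action["remove"]
                    removed[rm["path"]] = rm.get("deletionTimestamp")
                    live.pop(rm["path"], None)
    return live, removed
```

After a DELETE of every row, `live` would be empty while `removed` lists the parquet files that still occupy space on disk until VACUUM deletes them.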

To override the default retention period of 7 days (168 hours), I set a Spark config that disables Delta’s retention duration check and then specify a lower retention in the VACUUM command. Read the Delta documentation first so you’re comfortable doing this, because a short retention removes the ability to time travel to older versions and can cause failures for long-running concurrent readers or writers: Remove old files with the Delta Lake Vacuum Command | Delta Lake

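```python
# PySpark cell: allow VACUUM retention periods shorter than the default (168 hours)
spark.conf.set("spark.databricks.delta.retentionDurationCheck.enabled", "false")
```

```sql
%%sql
-- Spark SQL cell: delete every file the current table version no longer references, regardless of age
VACUUM webtelemetrydeltakey_nopart RETAIN 0 HOURS
```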
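The retention window explains why the first VACUUM left the folder at 88 GB: the files were removed only moments earlier, so they were still inside the 168-hour window. The Python sketch below is a simplified model of that rule, under the assumption that a removed file becomes eligible once its `deletionTimestamp` is older than now minus the retention period (real VACUUM also checks file modification times and cleans up files the log never referenced). eligible_for_vacuum is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

DEFAULT_RETENTION_HOURS = 168  # Delta's default retention: 7 days


def eligible_for_vacuum(removed, now, retention_hours=DEFAULT_RETENTION_HOURS):
    """Simplified model: return removed files whose deletion is older than the window.

    removed: {path: deletionTimestamp in epoch milliseconds}, as recorded in
    the remove actions of the Delta transaction log.
    """
    cutoff = now - timedelta(hours=retention_hours)
    cutoff_ms = int(cutoff.timestamp() * 1000)
    return sorted(path for path, ts in removed.items() if ts < cutoff_ms)


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    just_deleted = {"part-0.parquet": int(now.timestamp() * 1000) - 60_000}
    print(eligible_for_vacuum(just_deleted, now))                     # default window
    print(eligible_for_vacuum(just_deleted, now, retention_hours=0))  # RETAIN 0 HOURS
```

With the default window a file deleted a minute ago is kept; with `retention_hours=0` it becomes eligible, which matches what `RETAIN 0 HOURS` does once the safety check is disabled.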