External Tables vs Views…Which to Use and Why?

Serverless SQL Pools do not contain data (unlike Dedicated SQL Pools) but rather allow you to cast structure over data in Azure Storage, Azure Data Lake Gen1 & 2, Cosmos DB, and the Power Platform Dataverse. There are currently 2 supported objects which can be persisted to a Serverless SQL Pools database, External Tables and Views. What are the features and functionality of each? Which should you use and in what scenarios are each useful in? In this blog post we’ll look at the differences between the functionality of External Tables and Views and scenarios each object is suited to. We’ll also look at using External Tables and Views when working with the Delta Lake format.

We’ll look at both External Tables and Views now with a comparison table at the end of this blog.

Blog Overview

- Views

- Features & Functionality

- Useful Scenarios

- Example Syntax

- External Tables

- Features & Functionality

- Useful Scenarios

- Example Syntax

- CREATE EXTERNAL TABLE AS SELECT

- Using Delta Format with External Tables and Views

- Views

- External Tables

- Creating Tables using Spark and Querying with Serverless

- Create Table in Spark SQL

- Query Table using Serverless SQL Pools

- Comparison Table

- Conclusion

- References

Blog Summary

In this blog we look at the differences between Views and External tables and useful scenarios for each. We see that Views support partition pruning whilst External tables do not. We then test using the Delta and Parquet format with Views and External Tables and see that if the source Delta data is partitioned, we can use the partition column to filter and partition prune using Views but not External Tables. However, if the External Table has been created in a Spark database using Spark Pools then we are able to query that table using Serverless SQL and are able to partition prune successfully.

Views

Serverless SQL Pools supports the creation of SQL Views within a database which can then be queried exactly the same as a view in an Azure SQL database, Dedicated SQL Pool or a SQL Server instance. Views are useful in abstracting the syntax necessary to connect to data in an Azure Storage account or Cosmos DB. For example a Data Engineer could create a set of Views for Data Analysts and Business Intelligence Developers to use without those roles needing to understand the underlying source storage connections.

Features and Functionality

- Create a query-able view in a Serverless SQL Pools database to abstract the connection to the data source

- No need to define the columns or data types (schema information) at view creation time

- Can embed the filepath() function as a column to allow source folder partition pruning

Useful Scenarios

- Needing to quickly create query-able objects over source data without explicitly stating schema

- When source data is partitioned and is large enough that partition pruning is required

- When only a specific set of columns are required to be exposed from the data source

Example Syntax

Let’s now look at several SQL View creation syntax examples to highlight the various options available when creating Views.

The following code creates a view without defining any column names or data types plus we use the full URL to the root folder without requiring a DATA_SOURCE (URL to the Azure Storage account and Container) to be defined. The 2 * wildcards at the end of the BULK option tell Serverless SQL to traverse the folder structure. This is a quick and fast method of creating a view.

CREATE VIEW LDW.vwBlogSalesOrdersNoDataSource

AS

SELECT *

FROM OPENROWSET(

BULK 'https://storage.dfs.core.windows.net/datalakehouse/sourcedata/salesorder/**',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|' ) AS fctIn the following code we still don’t define any columns from the underlying data but this time we use a DATA_SOURCE. Creating a DATA_SOURCE with a base URL to Azure Storage means we can re-use that location across objects and just need to define the folder structure rather than the full URL. We also use the filepath() function to create 3 columns in the View which will expose the name of the folder at the specified level. We can use these 3 columns in a WHERE clause to partition prune which will target only those folders required to satisfy the query.

CREATE EXTERNAL DATA SOURCE ExternalDataSourceDataLake

WITH (

LOCATION = 'https://storage.dfs.core.windows.net/datalakehouse'

);

CREATE VIEW LDW.vwBlogSalesOrders

AS

SELECT *,

CAST(fct.filepath(1) AS INT) AS FilePathYear,

CAST(fct.filepath(2) AS TINYINT) AS FilePathMonth,

CAST(fct.filepath(3) AS DATE) AS FilePathDate

FROM

OPENROWSET

(

BULK 'sourcedata/salesorder/OrderYear=*/OrderMonth=*/OrderDatePartition=*/*.csv',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|'

) AS fctIn this next code segment we are now defining columns we would like to expose, as our example data has a header row we use the HEADER_ROW = TRUE option then we are able to define column names in the SELECT statement. Notice that we still do not define any data types, just the column name as Serverless SQL will derive the data type automatically.

CREATE VIEW LDW.vwBlogSalesOrdersColumns

AS

SELECT OrderID,

CustomerID,

OrderDate

FROM

OPENROWSET

(

BULK 'sourcedata/salesorder/**',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|'

) AS fctAlthough deriving data types automatically is useful, it can introduce inefficiency as Serverless SQL may choose a data type with a byte size far large than the actual data type and therefore increase data processed costs.

We can also use the WITH option to add schema definition to the view. In the code example below we use WITH to define the columns we would like to select from the source files and also define the data type.

CREATE VIEW LDW.vwBlogSalesOrdersWith

AS

SELECT * FROM

OPENROWSET

(

BULK 'sourcedata/salesorder/**',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

HEADER_ROW = TRUE,

FIELDTERMINATOR ='|'

)

WITH

(

OrderID INT,

CustomerID INT,

OrderDate DATE

) AS fctIf our source data does not contain a header row we can still define column names and assign data types, we now use the FIRSTROW option to tell Serverless SQL where the first row of data is located. In the WITH option we define column names, data types, and the position within the source files (E.G. first column is 1).

CREATE VIEW LDW.vwBlogSalesOrdersWithPosition

AS

SELECT * FROM

OPENROWSET

(

BULK 'sourcedata/salesorder/**',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT = 'CSV',

PARSER_VERSION = '2.0',

FIRSTROW = 1,

FIELDTERMINATOR ='|'

)

WITH

(

OrderID INT 1,

CustomerID INT 2,

OrderDate DATE 7

) AS fctExternal Tables

We now turn our attention to External Tables. Like Views, External Tables allow us to query source data however there are a few limitations in that we must create DATA_SOURCE and FILE_FORMAT objects, we are unable to partition prune using the filepath() function, and we must explicitly define the column names and data types.

With regards to partition pruning, we do note in the Microsoft documentation that External Tables only support partition pruning (folder partition elimination) when created via Spark pools and used in Serverless SQL Pools queries (we will look at this later in this blog post).

Features and Functionality

- Create a query-able table in a Serverless SQL Pools database to abstract the connection to the data source

- Must define the column names and data types (schema information) at view creation time

- Cannot use the filepath() function to partition prune, any WHERE filtering is done over the whole source data

Useful Scenarios

- Connecting to source data when partition pruning is not required

- Where source data can accept filter pushdown such as Parquet to mitigate no partition pruning

- Smaller datasets such as reference data or dimensions

- Write the results of a SELECT statement back to Azure Storage using CETAS

Example Syntax

Let’s look at an example of creating an External Table. We need to create an addition object for the FILE_FORMAT option.

CREATE EXTERNAL FILE FORMAT CsvWithHeaderFormat

WITH (

FORMAT_TYPE = DELIMITEDTEXT,

FORMAT_OPTIONS (

PARSER_VERSION = '2.0',

FIELD_TERMINATOR = '|',

FIRST_ROW = 2,

USE_TYPE_DEFAULT = FALSE,

STRING_DELIMITER = '"')

);

CREATE EXTERNAL TABLE LDW.BlogSalesOrders

(

OrderID INT,

CustomerID INT,

SalespersonPersonID INT,

PickedByPersonID INT,

ContactPersonID INT,

BackorderOrderID INT,

OrderDate DATE,

ExpectedDeliveryDate DATE,

CustomerPurchaseOrderNumber INT,

IsUndersupplyBackordered BIT,

PickingCompletedWhen DATE,

LastEditedBy INT,

LastEditedWhen DATE

)

WITH (

LOCATION = 'sourcedata/salesorder/**',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = CsvWithHeaderFormat

)CREATE EXTERNAL TABLE AS SELECT

External Tables can also be used to load/save the results of a SELECT statement to Azure Storage by using the CREATE EXTERNAL TABLE AS SELECT syntax. This is particularly useful if you with to transform source data and store as another file format such as Parquet. There are limitations in that we are unable to define a destination partition scheme nor control how many rows per file are created or the number of files, this is all done automatically.

Example Syntax

We’ll now create an External Tables with the results of a SELECT statement from a View. We will create a Parquet file format to use in the WITH options.

CREATE EXTERNAL FILE FORMAT SynapseParquetFormat

WITH (

FORMAT_TYPE = PARQUET

);

CREATE EXTERNAL TABLE LDW.AggregateSalesData

WITH (

LOCATION = 'destinationdata/salesorderaggregates/',

DATA_SOURCE = ExternalDataSourceDataLake,

FILE_FORMAT = SynapseParquetFormat

)

AS

SELECT...Using the Delta Lake Format with External Tables and Views

Currently the ability to query Delta Lake format data is in preview and we are able to create Views and External Tables over this data. What is interesting is that if the source Delta data has an existing partitioning scheme, for example Order Date, then we do not need to use filepath() in our View definition to partition prune. We can query the source data and use the partition schema column and this will successfully partition prune.

We’ll create a Delta Lake structure using Spark SQL in a Notebook connected to a Spark Pool in the following code and then test creating a View and also an External Table over this data in Serverless SQL Pools.

CREATE TABLE delta_salesorder

USING delta

PARTITIONED BY (OrderDatePartition)

LOCATION 'abfss://container@storage.dfs.core.windows.net/spark/deltalake/salesorder'

AS SELECT OrderID,

CustomerID,

SalespersonPersonID,

PickedByPersonID,

ContactPersonID,

BackorderOrderID,

CAST(OrderDate AS DATE) AS OrderDatePartition,

CAST(OrderDate AS DATE) AS OrderDate

FROM raw_salesorderViews over Delta Lake

We can now create a View in Serverless SQL Pools and use the location where the Delta format data was saved to. Note that the OPENROWSET function supports partition pruning when the source partition column is used to filter in the WHERE clause.

CREATE VIEW LDW.vwDeltaSalesOrders

AS

SELECT *

FROM

OPENROWSET(

BULK 'spark/deltalake/salesorder/',

DATA_SOURCE = 'ExternalDataSourceDataLake',

FORMAT='DELTA'

) fctWe can now query the view in Serverless SQL Pools to read the Delta Lake data without requiring a Spark Pool to be active (or even exist). If we use the OrderDatePartition column in the WHERE clause to filter, we can see data processed is reduced. If we do the same but use the non-partition column OrderDate then although we get the same results we do not see partition pruning.

--Baseline query which shows 64MB data processed

SELECT CustomerID,

COUNT(*) AS SalesOrderCount

FROM LDW.vwDeltaSalesOrders

GROUP BY CustomerID

--Filter using the partition schema column we see 1MB data processed (this will be rounded up to 10MB minimum however)

SELECT CustomerID,

COUNT(*) AS SalesOrderCount

FROM LDW.vwDeltaSalesOrders

WHERE OrderDatePartition = '2016-06-03'

GROUP BY CustomerID

--Filter using the non-partitioned column we see the same results but 30MB data processed so no partition pruning

SELECT CustomerID,

COUNT(*) AS SalesOrderCount

FROM LDW.vwDeltaSalesOrders

WHERE OrderDate = '2016-06-03'

GROUP BY CustomerIDExternal Tables over Delta Lake

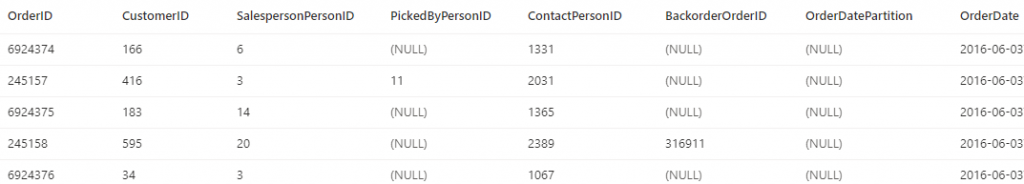

Although we can create External Tables successfully over Delta Lake data, we are unable to take advantage of partition pruning. When querying the table, the partition column used in the Delta data returns NULL and if used in a WHERE clause it does not return any data.

CREATE EXTERNAL FILE FORMAT SynapseDeltaFormat

WITH (

FORMAT_TYPE = DELTA

);

CREATE EXTERNAL TABLE LDW.DeltaSalesOrders

(

OrderID INT,

CustomerID INT,

SalespersonPersonID INT,

PickedByPersonID INT,

ContactPersonID INT,

BackorderOrderID INT,

OrderDatePartition DATE,

OrderDate DATE

)

WITH

(

LOCATION = 'spark/deltalake/salesorder/',

DATA_SOURCE = ExternalDataSourceDataWarehouse,

FILE_FORMAT = SynapseDeltaFormat

)When querying this External Table we see NULL for the partition scheme column and if filtering we do not see any data being returned.

SELECT TOP 5 * FROM LDW.DeltaSalesOrders

Creating Tables using Spark and Querying with Serverless

There is the concept of shared metadata between Serverless SQL Pools and Spark Pools which allows querying a table created in Spark but using the Serverless engine without needing an active Spark Pool running. We can create external tables in a Spark database and then use those tables in Serverless SQL Pools to read data. A simple process would be to create the table in Spark (e.g. using Spark SQL) and then shut the Spark cluster down and use the table in Serverless SQL Pools. We can do this for both Delta format tables and regular external tables in Spark. Data can be added to the underlying data source and this would be reflected when querying using Serverless.

Create Table in Spark SQL

The following Spark SQL code can be run in a Notebook attached to a Spark Pool. Once the table has been created, the Spark pool can be terminated (or can be left to pause itself depending on the timeout setting). This is the same Spark SQL code seen earlier except we are now using parquet rather than delta, however the process and outcome are the same.

CREATE TABLE raw_salesorder

USING parquet

PARTITIONED BY (OrderDatePartition)

LOCATION 'abfss://container@storage.dfs.core.windows.net/spark/deltalake/salesorder'

AS SELECT OrderID,

CustomerID,

SalespersonPersonID,

PickedByPersonID,

ContactPersonID,

BackorderOrderID,

CAST(OrderDate AS DATE) AS OrderDatePartition,

CAST(OrderDate AS DATE) AS OrderDate

FROM raw_salesorderQuery Table using Serverless SQL Pools

We will use 2 queries used in the Views over Delta Lake section to query the external table created in Spark but we are using the Serverless SQL Pools engine to query. We will see that when using the OrderDatePartition column in the WHERE clause it partition prunes successfully, as indicated in the MS documentation.

--Baseline query which shows 57MB data processed

SELECT CustomerID,

COUNT(*) AS SalesOrderCount

FROM sparkdatabase.dbo.delta_salesorder

GROUP BY CustomerID

--Filter using the partition schema column we see 1MB data processed (this will be rounded up to 10MB minimum however)

SELECT CustomerID,

COUNT(*) AS SalesOrderCount

FROM sparkdatabase.dbo.raw_salesorder

WHERE OrderDatePartition = '2016-06-03'

GROUP BY CustomerIDComparison

Here is a table comparing features and functionality between External Tables and Views.

| Functionality | External Table | Views |

| Persisted object in database | Yes | Yes |

| Must Define Schema (Column Names & Data Types) | Yes | No |

| Supports partition pruning | No (Yes if Spark table) | Yes |

| Can be used to write data back to storage | Yes | No |

| Requires a DATA_SOURCE object | Yes | No |

| Requires a FILE_FORMAT object | Yes | No |

| Supports FILE_FORMAT | Yes | No |

| Supports DATA_SOURCE | Yes | Yes |

| Cosmos DB Supported | No | Yes |

| Supports DELTA format | Yes | Yes |

| Supports DELTA partition pruning | No (Yes if Spark table) | Yes |

| Nested Types and JSON | No | Yes |

Conclusion

During this blog we have seen that Views offer more flexibility over External Tables and that External Tables cannot partition prune unless the table is created in a Spark database. We have seen that we can use both Views and External Tables with the Delta format, however only Views (which use the OPENROWSET command) support partition pruning if the Delta data has a partition scheme. If the External Table is created in a Spark database using a Spark Pool then we can query this table in Serverless SQL and it supports partition pruning. If we wish to cast a simple structure over source data then a View gives us the flexibility to do this, however an External Table allows us to actually write data to Azure Storage.

References

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-external-tables

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-tables-cetas

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/create-use-views

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/create-use-external-tables

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/create-external-table-as-select

- https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-specific-files

- https://docs.microsoft.com/en-us/azure/synapse-analytics/synapse-link/concept-synapse-link-cosmos-db-support

- https://docs.databricks.com/delta/best-practices.html#language-sql

- https://docs.microsoft.com/en-us/azure/synapse-analytics/metadata/table