Databricks Data Engineer Associate Certification Overview

I’ve been looking at taking the Databricks Data Engineer Associate Certification (the Databricks page for the certification is here) as I’ve noted the certification covers areas such as the overall Databricks platform, Delta Lake and Delta Live Tables, the SQL endpoints, dashboarding, and orchestration – perfect for any work I would do in Databricks. The certification is targeting people who have up to 6 months experience working with Databricks. There is the Spark Developer certification but for people like me who are Spark-light in terms of skillset, it can seem quite daunting.

The certification covers areas such as the overall Databricks platform, Delta Lake and Delta Live Tables, the SQL endpoints, dashboarding, and orchestration

Why do this exam? Well, I like the inclusion of Delta Lake which can be used in many engines…such as Serverless SQL Pools in Synapse Analytics. So being able to focus on how to work with Delta is very useful. There’s also Delta Live Tables too, a great addition to the platform. I also like the Just Enough Python section, as I’m not much of a Python programmer but being given guidance on relevant areas to concentrate on is very useful. Overall it gives a great overview of the Databricks platform and keeps it very relevant to the Lakehouse pattern. Plus it’s not heavy on the actual Spark implementation, very useful for people like me!

I also like to see the list of skills that are expected in the certification, this might sound obvious but I like to know what a “Databricks Data Engineer” might need to know (at least at this level of experience). E.G. if you want to work as an Azure Data Engineer, what skills might you need? What platforms and features should you use? The Microsoft DP-203 exam lists out all the expected skills and platforms and really gives you a focus (and boundary) on what you need to know – great for learning.

Let’s dive into the exam details and expected skills for the Databricks Certified Data Engineer Associate. I won’t be providing links to learning for each and every item, but rather some overall resources to look at.

Skills Measured

- Databricks Lakehouse Platform (24%)

- ELT with Spark SQL and Python (29%)

- Incremental Data Processing (22%)

- Production Pipelines (16%)

- Data Governance (9%)

Exam Details

- Time: 90 minutes

- Passing score: 70%

- Fee: $200

- Retake policy: Any time but must pay fee again

- Questions: 45

Learning Resources

- Azure Databricks documentation | Microsoft Learn

- Databricks Learning

- Databricks Certified Data Engineer Associate Practice Exams | Udemy

- Databricks Certified Data Engineer Associate – Preparation | Udemy

- Certification Overview video on Databricks Academy

- databricks-academy/data-engineering-with-databricks-english (github.com)

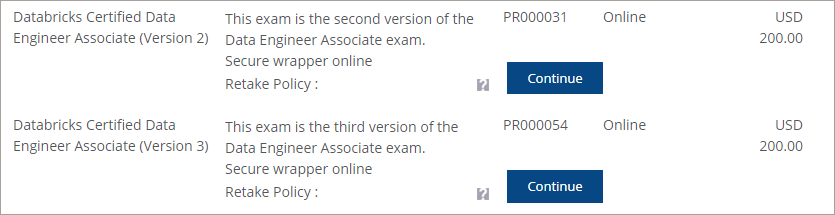

Exam Versions

As of November/December 2022 there are currently 2 versions of the exam, V2 and V3. I have listed the skills required for the V2 version of the exam as V3 has only just been released. On the Databricks Academy site, there are V2 and V3 courses to align with the exam version. Of note is that Structured Streaming and Auto Loader content has been dropped from V3. Thanks to Kevin Chant for clarifying the exam versions.

You will need to register with the exam platform to book the exam here.

The choice is yours in terms of which version to study for, the V2 exam is available until April 2023.

Databricks Academy

There are courses available on the Databricks Academy website. You’ll need to register with the academy here.

- Databricks Data Engineer Learning

- Data Engineering with Databricks V2

- Data Engineering with Databricks V3 (latest course)

Let’s now breakdown all the skills measures by category. This list was compiled directly from the Certification Overview Video on the Databricks Academy website. I find it very useful to list out all the skills measured from the overall category to the detailed items.

Databricks Lakehouse Platform (24%)

Lakehouse

Describe the components of the Databricks Lakehouse.

- Lakehouse concepts

- Lakehouse vs Data Warehouse

- Lakehouse vs Data Lake

- Data quality improvements

- Platform architecture

- High-level architecture & key components of a workspace deployment

- Core services in a Databricks workspace deployment

- Benefits of the Lakehouse to Data Teams

- Organisational data problems that Lakehouse solves

- Benefits to different roles in a Data team

Data Science & Engineering Workspace

Complete basic code development tasks using services of the Databricks Data Engineering & Data Science Workspace.

- Clusters

- All-purpose clusters vs Job clusters

- Cluster instances & pools

- DBFS

- Managing permissions on tables

- Role permissions and functions

- Data Explorer

- Notebooks

- Features & limitations

- Collaboration best practices

- Repos

- Suppored features & Git operations

- Relevance in CI/CD workflows in Databricks

Delta Lake

- General concepts

- ACID transactions on a data lake

- Features & Benefits of Delta lake

- Table Management & Manipulation

- Creating tables

- Managing files

- Writing to tables

- Dropping tables

- Optimisations

- Supported features & benefits

- Table utilities to manage files

ELT with Spark SQL & Python (29%)

- Build ELT pipelines using Spark SQL & Python

- Relational entities (databases, tables, and views)

- ELT (Creating tables, writing data to tables, transforming data, & UDFs)

- Manipulating data with Spark SQL & Python

Relational Entities

Leverage Spark SQL DDL to create and manipulate relational entities on Databricks

- Databases

- Create databases in specific locations

- Retrieve locations of existing databases

- Modify and delete databases

- Tables

- Managed vs External Tables

- Create and drop managed and external tables

- Query and modify managed and external tables

- Views and CTEs

- Views vs Temporary Tables

- Views vs Delta Lake tables

- Creating Views and CTEs

ELT Part 1: Extract & Load Data into Delta Lake

Use Spark SQL to extract, load, and transform data to support production workloads and analytics in the Lakehouse.

- Creating Tables

- External sources vs Delta Lake tables

- Methods to create tables and use cases

- Delta table configurations

- Different file formats and data sources

- Create Table As Select statements

- Writing Data to Tables

- Methods to write to tables and use cases

- Efficiency for different operations

- Resulting behaviours in target tables

ELT Part 2: Use Spark SQL to Transform Data

Use Spark SQL to extract, load, and transform data to support production workloads and analytics in the Lakehouse

- Cleaning Data with Common SQL

- Methods to deduplicate data

- Common cleaning methods for different types of data

- Combining Data

- Join types and strategies

- Set operators and applications

- Reshaping Data

- Different operations to transform arrays

- Benefits of array functions

- Applying higher order functions

- Advanced Operations

- Manipulating nested data fields

- Applying SQL UDFs for custom transformations

Just Enough Python

Leverage Pyspark for advanced code functionality needed in production applications.

- Spark SQL Queries

- Executing Spark SQL queries in Pyspark

- Passing Data to SQL

- Temporary Views

- Converting tables to and from DataFrames

- Python syntax

- Functions and variables

- Control flow

- Error handling

Incremental Data Processing (22%)

Incrementally process data includes:

- Structured Streaming (general concepts, triggers, watermarks)

- Auto Loader (streaming reads)

- Multi-hop Architecture (bronze-silver-gold, streaming applications)

- Delta Live Tables (benefits and features)

Structured Streaming

- General Concepts

- Programming model

- Configuration for reads and writes

- End-to-end fault tolerance

- Interacting with streaming queries

- Triggers

- Set up streaming writes with different trigger behaviours

- Watermarks

- Unsupported operations on streaming data

- Scenarios in which watermarking data would ne necessary

- Managing state with watermarking

Auto Loader

- Define streaming reads with Auto Loader and Pyspark to load data into Delta

- Define streaming reads on tables for SQL manipulation

- Identifying source locations

- Use cases for using Auto Loader

Multi-hop Architecture

- Bronze

- Bronze vs Raw tables

- Workloads using bronze tables as source

- Silver and Gold

- Silver vs gold tables

- Workloads using silver tables as source

- Structured Streaming in Multi-hop

- Converting data from bronze to silver levels with validation

- Converting data from silver to gold levels with aggregation

Delta Live Tables

- General concepts

- Benefits of using Delta Live Tables for ETL

- Scenarios that benefit from Delta Live Tables

- UI

- Deploying DLT pipelines from notebooks

- Executing updates

- Explore and evaluate results from DLT pipelines

- SQL syntax

- Converting SQL definitions to Auto Loader syntax

- Common differences in DLT SQL syntax

Production Pipelines (16%)

Build production pipelines for data engineering applications and Databricks SQL queries and dashboards, including:

- Workflows (Job scheduling, task, orchestration, UI)

- Dashboards (endpoints, scheduling, alerting, refreshing)

Workflows

Orchestrate tasks with Databricks Workflows

- Automation

- Setting up retry policies

- Using cluster pools and why

- Task Orchestration

- Benefits of using multiple tasks in jobs

- Configuring predecessor tasks

- UI

- Using notebook parameters in Jobs

- Locating job failures using Job UI

Dashboards

Using Databricks SQL for on-demand queries.

- Databricks SQL Endpoints

- Creating SQL endpoints for different use cases

- Query Scheduling

- Scheduling query based on scenario

- Query reruns based on interval time

- Alerting

- Configure notifications for different conditions

- Configure and manage alerts for failure

- Refreshing

- Scheduling dashboard refreshes

- Query reruns impact of dashboard performance

Databricks Governance (9%)

Unity Catalog

- Benefits of Unity Catalog

- Unity Catalog Features

Entity Permissions

- Team-based & User-based Permissions

- Configuring access to production tables and databases

- Granting different levels of permissions to users and groups

2 thoughts on “Databricks Data Engineer Associate Certification Overview”

Comments are closed.