The Reality of ALTER TABLE… in Fabric Warehouses

Overview

With the recent announcement from Microsoft that ALTER TABLE…ADD COLUMN is now supported in Fabric Warehouses, you might be forgiven for saying “So what? Database systems have had this feature for decades!” And you’d be correct. Well, bear in mind that the engine that powers the Fabric Warehouse started its public life 5 years ago as Synapse Serverless SQL Pools. This Polaris engine has seen a significant enhancement within the Fabric ecosystem to deliver the Lakehouse SQL Endpoint and Warehouse services.

As the Warehouse SQL engine uses the Delta functionality to handle transactions and Parquet file format to store data (at its simplest) by default, any functionality that is added to Warehouses must work with the Delta format. In this blog I’ll cover:

- ALTER TABLE support up until now

- New ALTER TABLE…ADD COLUMN

- Can we DROP and ALTER an existing column?

- Delta Versioning & what’s in the Delta log…

Thanks to Mark Pryce-Maher for suggesting I “go poke around the Delta logs…”

Previous ALTER TABLE Support

Up until recently (July 2024), the available functionality within Fabric Warehouses to alter tables was limited to keys and constraints. We could add Primary Keys, Unique Constraints, and Foreign Keys to Warehouse tables by using ALTER TABLE… There is no support for adding keys or constraints in the CREATE TABLE statement, it must be done via ALTER TABLE. It’s worth noting that these keys and constraints must be created with the NOT ENFORCED keyword.

In the example below we’ll create a table in the Warehouse and alter it to add a primary key.

--create table

CREATE TABLE dbo.DimProduct

(

ProductKey INT NOT NULL,

ProductName VARCHAR(255)

);

--add primary key

ALTER TABLE dbo.DimProduct

ADD CONSTRAINT PK_DimProduct PRIMARY KEY NONCLUSTERED (ProductKey) NOT ENFORCED;

Introducing ALTER TABLE…ADD column

As of July 2024 we are now able to issue an ALTER TABLE…ADD column statement to add a new nullable column to the table. All we need to do is issue a standard ALTER TABLE ADD statement and the new column will be added to the existing table.

ALTER TABLE dbo.DimProduct ADD ProductSubCategory VARCHAR(255);

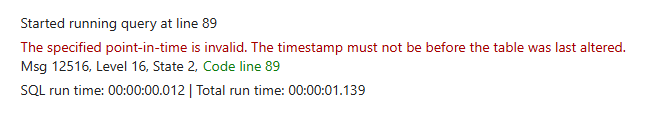

Time Travel Issue when altering table

It’s worth noting that when issuing an ALTER TABLE statement, it resets the date/time the table is being tracked to the date/time the table was altered. For the existing functionality of altering a table to add primary, unique, and foreign key constraints it probably did not surface that regularly as these are often implemented when a table is first created. But now we can add new columns, this issue may surface more regularly. One to watch out for.

Any Other Support for ALTER TABLE?

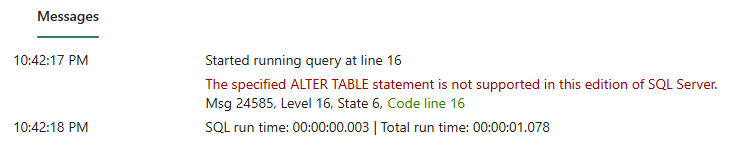

But the fun stops there (for the time being) as if we try any other ALTER TABLE statement to either DROP a column or alter the data type, it doesn’t work.

ALTER TABLE dbo.DimProduct ALTER COLUMN ProductSubCategory VARCHAR(100); ALTER TABLE dbo.DimProduct DROP COLUMN ProductSubCategory ;

For both commands above, we get the same error message:

Well, let’s look at why…

Delta Table Protocol Versioning

Delta tables have the concept of protocol versioning which will impact the functionality available when working with a Delta table. Let’s take a look at the Lakehouse now as we have control over the version/features of Delta that we can use. In comparison the Warehouse is a “closed box” in terms of the Delta table versioning available.

Create And Modify Delta Table

Let’s create a new Delta table in a Lakehouse using SQL in a Notebook, we’ll also alter the table and add a new column.

%%sql

CREATE TABLE dimproduct

(

ProductKey INT,

ProductName STRING

) USING DELTA

ALTER TABLE dimproduct ADD COLUMN ProductSubCategory STRING

We can try and alter the table and drop an existing column (or rename and existing column).

%%sql ALTER TABLE dimproduct DROP COLUMN ProductSubCategory

This doesn’t work and we’ll get an error stating:

“DROP COLUMN is not supported for your Delta table. Please enable Column Mapping on your Delta table with mapping mode ‘name’. You can use one of the following commands.

If your table is already on the required protocol version: ALTER TABLE table_name SET TBLPROPERTIES (‘delta.columnMapping.mode’ = ‘name’)

If your table is not on the required protocol version and requires a protocol upgrade:

ALTER TABLE table_name SET TBLPROPERTIES ( ‘delta.columnMapping.mode’ = ‘name’, ‘delta.minReaderVersion’ = ‘2’, ‘delta.minWriterVersion’ = ‘5’)“

We do have ways in PySpark to drop the column by re-writing the data from the table back and specifying to overwrite the schema. But that means reading and writing all the table data…

df = spark.sql("SELECT * FROM AIRBNB_RAW.dimproduct")

df.drop(df.ProductSubCategory) \

.write.format("delta") \

.mode("overwrite") \

.option("overwriteSchema", True) \

.save("Tables/dimproduct")

Upgrade Delta Table

Let’s upgrade the Delta table by using the code displayed in the original error message (pretty handy to be given the code in the actual error message itself!).

NB: it’s very much worth noting at this stage that although we can upgrade the Delta table in a Lakehouse to support column mapping, it will break metadata synchronization to the Lakehouse SQL Endpoint & Semantic Model as they are (like the Warehouse) no aware of the column mapping feature. Thanks to Frithjof Vassbø for reminding me!

%%sql ALTER TABLE dimproduct SET TBLPROPERTIES ( 'delta.columnMapping.mode' = 'name', 'delta.minReaderVersion' = '2', 'delta.minWriterVersion' = '5')

We can now drop the column with no errors.

%%sql ALTER TABLE dimproduct DROP COLUMN ProductSubCategory

What’s in the Delta Transaction Log?

So how does this work then? Let’s take a look inside the Delta log for the operations we carried out above. We’ll look at the log of the original table when it was created in the lakehouse, then look at the log after the table properties have been changed to enable column mapping. Then we’ll look at the Warehouse table log.

Create and Modify Delta Table

This is the Delta Transaction log for creating the table in the Lakehouse (I’ve omitted parts of the log to make it slightly more readable). In SchemaString you can see the fields in the table and the associated metadata name, type, and nullable.

{

"protocol": {

"minReaderVersion": 1,

"minWriterVersion": 2

}

}

{

"metaData": {

"id": "f439cc23-498d-4713-80e8-5f732032b0ab",

"format": {

"provider": "parquet",

"options": {}

},

"schemaString": "{\"type\":\"struct\",\"fields\":[{\"name\":\"ProductKey\",\"type\":\"integer\",\"nullable\":true,\"metadata\":{}},{\"name\":\"ProductName\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}}]}",

"partitionColumns": [],

"configuration": {},

"createdTime": 1721598597504

}

}

After the ALTER TABLE ADD column statement was issued:

{

"commitInfo": {

"timestamp": 1721641468097,

"operation": "ADD COLUMNS",

"operationParameters": {

"columns": "[{\"column\":{\"name\":\"ProductSubCategory\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}}}]"}

}

{

"schemaString": "{\"type\":\"struct\",\"fields\":[{\"name\":\"ProductKey\",\"type\":\"integer\",\"nullable\":true,\"metadata\":{}},{\"name\":\"ProductName\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}},{\"name\":\"ProductSubCategory\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}}]}",

"partitionColumns": [],

"configuration": {},

"createdTime": 1721598597504

}

}

We can see the new column has been added and is present in the SchemaString section.

Now lets see the Delta log after we upgrade the table, we get some interesting metadata including:

- {\”delta.columnMapping.id\”:1,\”delta.columnMapping.physicalName\”:\”ProductKey\”}}

- {\”delta.columnMapping.id\”:2,\”delta.columnMapping.physicalName\”:\”ProductName\”}}

- {\”delta.columnMapping.id\”:3,\”delta.columnMapping.physicalName\”:\”ProductSubCategory\”}}

{

"schemaString": "{\"type\":\"struct\",\"fields\":[{\"name\":\"ProductKey\",\"type\":\"integer\",\"nullable\":true,\"metadata\":{\"delta.columnMapping.id\":1,\"delta.columnMapping.physicalName\":\"ProductKey\"}},{\"name\":\"ProductName\",\"type\":\"string\",\"nullable\":true,\"metadata\":{\"delta.columnMapping.id\":2,\"delta.columnMapping.physicalName\":\"ProductName\"}},{\"name\":\"ProductSubCategory\",\"type\":\"string\",\"nullable\":true,\"metadata\":{\"delta.columnMapping.id\":3,\"delta.columnMapping.physicalName\":\"col-f772a929-55a7-477c-924c-5dc1b8aeb86e\"}}]}",

"partitionColumns": [],

"configuration": {

"delta.columnMapping.mode": "name",

"delta.columnMapping.maxColumnId": "3"

},

"createdTime": 1721643187063

}

}

This is how operations to alter existing columns can now work, by using the delta.columnMapping.id property, which will have a unique number assigned to each column.

Warehouse

If we take a look at the Delta log of the Warehouse table, we can see there is no columnMapping metadata present.

{

"protocol": {

"minReaderVersion":1,

"minWriterVersion":1

}

}

{"metaData": {

"id":"18F30AE4-0ACA-4503-A8FD-63635C5C55D5",

"name":"DimProduct",

"format": {

"provider":"parquet",

"options":{}

},

"schemaString":"{\"type\":\"struct\",\"fields\":[{\"name\":\"ProductKey\",\"type\":\"integer\",\"nullable\":false,\"metadata\":{}},{\"name\":\"ProductName\",\"type\":\"string\",\"nullable\":true,\"metadata\":{}}]}",

"partitionColumns":[],"configuration":{}}}

Let’s finally look at the properties of the table in the Warehouse and Lakehouse using DESCRIBE.

#Lakehouse table

display(spark.sql("DESCRIBE DETAIL delta.`abfss://<onelake_lakehouse_table_location>/Tables/dimproduct`"))

#Warehouse table

display(spark.sql("DESCRIBE DETAIL delta.`abfss://<onelake_warehouse_table_location>/Tables/dimproduct`"))

I’ve only select a few columns from the DESCRIBE statement and added Table Type to show where the properties are from. You can see that the Warehouse table has no columnMapping properties, the minReaderVersion is set to 1 and the minWriteVersion is set to 2.

| Table Type | format | properties | minReaderVersion | minWriteVersion |

|---|---|---|---|---|

| Lakehouse | delta | {“delta.columnMapping.mode”:”name”,”delta.columnMa…” delta.columnMapping.mode: “”name”” delta.columnMapping.maxColumnId: “”3″” | 2 | 5 |

| Warehouse | delta | {} | 1 | 1 |

All this means is that the Warehouse currently doesn’t support the Delta column mapping feature, but as we’ve seen, Fabric is a fast evolving product so keep an eye out!